Overview

Along with the dataset, we provide a benchmark suite covering different aspects of semantic interpretation in the agricultural domain at different levels of granularity. To ensure an unbiased evaluation of these tasks, we follow the common best practice of evaluating test-set results on a server, which allows us to keep the test-set labels private. We plan to host these tasks on CodaLab Competitions. Predictions are expected in the same PNG format as the training-set labels.

We provide several benchmark tasks covering various applications:

- Semantic segmentation of plants (CodaLab competition)

- Panoptic segmentation of plants (CodaLab competition)

- Instance segmentation of leaves (CodaLab competition)

- Hierarchical panoptic segmentation of plants and leaves (CodaLab competition)

- Detection of plants (CodaLab competition)

- Detection of leaves (CodaLab competition)

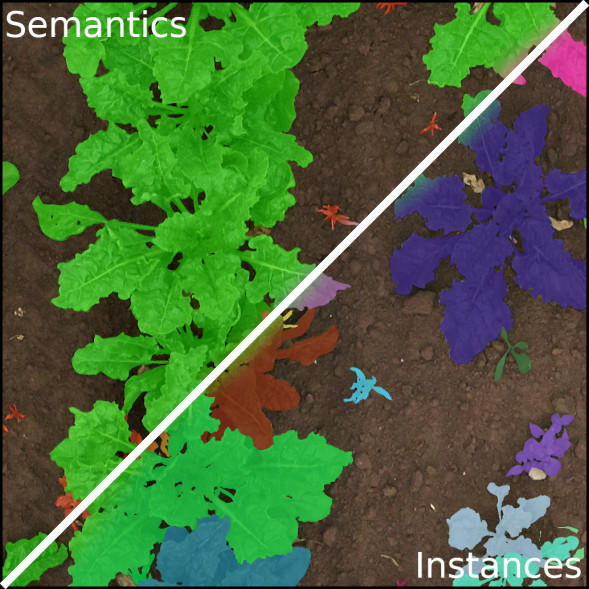

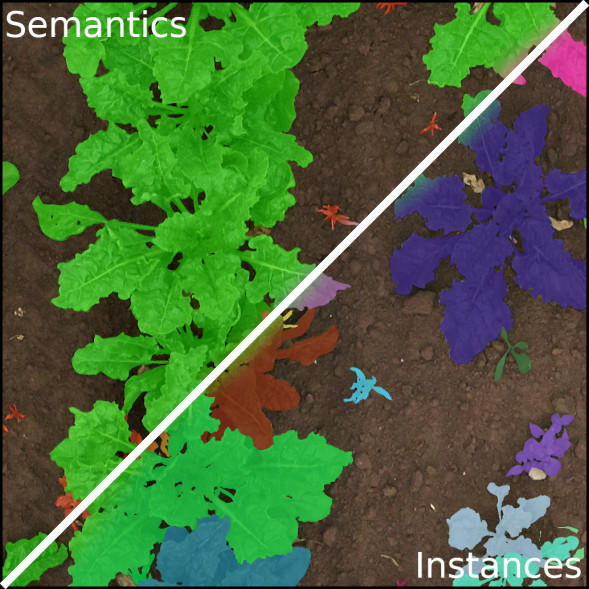

Semantic Segmentation

Please see our competition website for more information on the benchmark and submission process.

Task. In this benchmark, an approach must provide, for a given image, a semantic segmentation of crops, weeds, and soil, i.e., a pixel-wise classification.

Metric. We use the commonly employed mean intersection-over-union (mIoU) and also report class-specific IoUs for comparing approaches.
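
Below is a minimal sketch of the metric. It assumes flattened prediction and ground-truth label maps with hypothetical class ids (e.g., 0 = soil, 1 = crop, 2 = weed), with counts accumulated over all evaluated pixels. The official evaluation script may differ, for example in how it treats unlabeled pixels. The IoU of class c is TP / (TP + FP + FN), and the mean IoU averages the class values.

```python
import math
from collections.abc import Sequence


def iou_per_class(pred: Sequence[int], gt: Sequence[int], num_classes: int) -> list[float]:
    """Per-class IoU = TP / (TP + FP + FN), computed from flattened label maps."""
    if len(pred) != len(gt):
        raise ValueError("prediction and ground truth must have the same size")
    tp = [0] * num_classes
    fp = [0] * num_classes
    fn = [0] * num_classes
    for p, g in zip(pred, gt):
        if p == g:
            tp[g] += 1
        else:
            fp[p] += 1  # pixel wrongly assigned to class p
            fn[g] += 1  # pixel of class g that was missed
    ious = []
    for c in range(num_classes):
        denom = tp[c] + fp[c] + fn[c]
        # NaN marks a class that occurs in neither prediction nor ground truth.
        ious.append(tp[c] / denom if denom > 0 else float("nan"))
    return ious


def mean_iou(ious: Sequence[float]) -> float:
    """Average of the per-class IoUs, skipping NaN entries."""
    valid = [v for v in ious if not math.isnan(v)]
    return sum(valid) / len(valid) if valid else float("nan")
```

Consider the maps `pred = [0, 1, 1, 1, 2, 0]` and `gt = [0, 0, 1, 1, 2, 2]`. Their class IoUs are 1/3, 2/3 and 1/2, which gives a mean IoU of 0.5.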

Panoptic Segmentation

Please see our competition website for more information on the benchmark and submission process.

Task. In this benchmark, an approach must provide not only the semantic classes crop, weed, and soil but also instance masks for crops and weeds.

Metric. We use the panoptic quality (PQ) proposed by Kirillov et al. as the main metric. We also report class-wise panoptic qualities as well as the auxiliary metrics recognition quality (RQ) and segmentation quality (SQ) to allow a more fine-grained comparison of approaches. For the stuff class, i.e., soil, we use the intersection-over-union instead, as proposed by Porzi et al.
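
For reference, the definition by Kirillov et al. factors PQ into SQ and RQ:

- A predicted segment and a ground-truth segment of class c form a true-positive pair when their IoU exceeds 0.5, which makes the matching unique.
- Unmatched predictions are false positives.
- Unmatched ground-truth segments are false negatives.

Following the Porzi et al. convention stated above, the soil class contributes its IoU instead of a PQ value. Taking the overall score as the mean over the class set C is an assumption of this sketch.

```latex
\mathrm{PQ}_c
  = \frac{\sum_{(p,g)\in \mathit{TP}_c} \mathrm{IoU}(p,g)}
         {|\mathit{TP}_c| + \tfrac{1}{2}|\mathit{FP}_c| + \tfrac{1}{2}|\mathit{FN}_c|}
  = \underbrace{\frac{\sum_{(p,g)\in \mathit{TP}_c} \mathrm{IoU}(p,g)}{|\mathit{TP}_c|}}_{\mathrm{SQ}_c}
    \times
    \underbrace{\frac{|\mathit{TP}_c|}{|\mathit{TP}_c| + \tfrac{1}{2}|\mathit{FP}_c| + \tfrac{1}{2}|\mathit{FN}_c|}}_{\mathrm{RQ}_c},
\qquad
\mathrm{PQ} = \frac{1}{|C|} \sum_{c \in C} \mathrm{PQ}_c,
\quad \mathrm{PQ}_{\text{soil}} := \mathrm{IoU}_{\text{soil}}
```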

Leaf Instance Segmentation

Please see our competition website for more information on the benchmark and submission process.

Task. In this benchmark, an approach needs to provide instance masks for crop leaves.

Metric. We use the panoptic quality (PQ) proposed by Kirillov et al. as the main metric. We also report class-wise panoptic qualities as well as the auxiliary metrics recognition quality (RQ) and segmentation quality (SQ) to allow a more fine-grained comparison of approaches.
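
The computation for a single class can be sketched as follows. The sketch assumes flattened instance-id maps for one image, in which 0 marks background and every other id is one leaf instance; both are assumptions, not the benchmark's documented format. It omits the special handling of void regions described by Kirillov et al. A dataset-level evaluation would accumulate TP, FP, FN and the matched IoUs over all images before dividing. The function name is hypothetical.

```python
from collections import Counter
from collections.abc import Sequence


def panoptic_quality(pred: Sequence[int], gt: Sequence[int]) -> tuple[float, float, float]:
    """Return (PQ, SQ, RQ) for one class from flattened instance-id maps.

    Assumption: id 0 marks background; every other id is one instance.
    """
    if len(pred) != len(gt):
        raise ValueError("prediction and ground truth must have the same size")
    pred_area = Counter(p for p in pred if p != 0)
    gt_area = Counter(g for g in gt if g != 0)
    overlap = Counter((p, g) for p, g in zip(pred, gt) if p != 0 and g != 0)

    matched_ious = []
    matched_pred, matched_gt = set(), set()
    for (p, g), inter in overlap.items():
        iou = inter / (pred_area[p] + gt_area[g] - inter)
        # For non-overlapping segments, IoU > 0.5 allows at most one match each.
        if iou > 0.5:
            matched_ious.append(iou)
            matched_pred.add(p)
            matched_gt.add(g)

    tp = len(matched_ious)
    fp = len(pred_area) - len(matched_pred)  # unmatched predicted instances
    fn = len(gt_area) - len(matched_gt)      # unmatched ground-truth instances
    if tp == 0:
        # No matched pair: reported as 0 here (PQ is undefined if both maps are empty).
        return 0.0, 0.0, 0.0
    sq = sum(matched_ious) / tp
    rq = tp / (tp + 0.5 * fp + 0.5 * fn)
    return sq * rq, sq, rq
```

Consider `pred = [5, 5, 5, 0, 7, 0, 9, 9]` and `gt = [1, 1, 1, 1, 2, 2, 0, 0]`. Only instances 5 and 1 match (IoU 0.75). Instance 7 reaches exactly 0.5 and therefore does not count. This yields SQ = 0.75, RQ = 0.4 and PQ = 0.3.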

Hierarchical Panoptic Segmentation

Please see our competition website for more information on the benchmark and submission process.

Task. In this benchmark, an approach must provide a panoptic segmentation of plants and leaves at the same time. This makes it possible to associate leaves with plants, which provides a holistic semantic interpretation of the plant images.
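
One simple way to derive such an association from separate plant and leaf instance maps is a majority vote over pixel overlap. This is a hypothetical post-processing sketch, not the benchmark's submission format or evaluation rule. It assumes flattened instance-id maps in which 0 means "no instance".

```python
from collections import Counter, defaultdict
from collections.abc import Sequence


def assign_leaves_to_plants(plant_ids: Sequence[int], leaf_ids: Sequence[int]) -> dict[int, int]:
    """Map each leaf instance id to the plant instance id it overlaps most.

    Assumption: id 0 means "no instance" in both maps. Leaves that overlap
    no plant pixel are left out of the result.
    """
    if len(plant_ids) != len(leaf_ids):
        raise ValueError("both maps must have the same size")
    votes = defaultdict(Counter)  # leaf id -> Counter of overlapping plant ids
    for plant, leaf in zip(plant_ids, leaf_ids):
        if plant != 0 and leaf != 0:
            votes[leaf][plant] += 1
    return {leaf: counts.most_common(1)[0][0] for leaf, counts in votes.items()}
```

For example, `assign_leaves_to_plants([1, 1, 1, 2, 2, 0], [3, 3, 4, 4, 4, 0])` assigns leaf 3 to plant 1 and leaf 4 to plant 2.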

Metric. We use the panoptic quality (PQ) proposed by Kirillov et al., computed for crops and crop leaves, as the main metric and also report class-wise panoptic qualities.

Plant Detection

Please see our competition website for more information on the benchmark and submission process.

Task. In this benchmark, an approach must provide bounding boxes for crops and weeds.

Metric. We use the commonly employed average precision (AP), averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05, as proposed by Lin et al. for the COCO benchmark. We also report AP values for IoU thresholds of 0.5 and 0.75.
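
The sketch below illustrates a COCO-style computation for a single image and a single class:

- Detections are matched greedily, in descending score order, to the best unmatched ground-truth box whose IoU reaches the threshold.
- Precision is then interpolated at 101 recall levels.

The sketch deliberately omits parts of the official COCO protocol: multiple images and classes, the limit of 100 detections per image, area ranges and crowd regions. The reference implementation is `pycocotools`; all names here are hypothetical.

```python
from collections.abc import Sequence

# A box is (x_min, y_min, x_max, y_max); a detection is (box, confidence score).
Box = tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets: Sequence[tuple[Box, float]], gts: Sequence[Box], thr: float) -> float:
    """101-point interpolated AP at a single IoU threshold (simplified COCO style)."""
    if not gts:
        return float("nan")  # AP is undefined without ground truth
    used = [False] * len(gts)
    hits = []  # 1 for a true positive, 0 for a false positive, in score order
    for box, _score in sorted(dets, key=lambda d: d[1], reverse=True):
        best, best_iou = -1, thr
        for i, gt in enumerate(gts):
            iou = box_iou(box, gt)
            if not used[i] and iou >= best_iou:
                best, best_iou = i, iou
        if best >= 0:
            used[best] = True
        hits.append(1 if best >= 0 else 0)

    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    # Precision envelope: make precision non-increasing along the ranking.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sample the envelope at recall levels 0.00, 0.01, ..., 1.00.
    total = 0.0
    for r in (j / 100 for j in range(101)):
        total += next((p for p, rc in zip(precisions, recalls) if rc >= r), 0.0)
    return total / 101


def coco_ap(dets: Sequence[tuple[Box, float]], gts: Sequence[Box]) -> float:
    """Mean AP over the IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]
    return sum(average_precision(dets, gts, t) for t in thresholds) / len(thresholds)
```

Take `gts = [(0, 0, 10, 10), (20, 20, 30, 30)]` and `dets = [((0, 0, 10, 10), 0.9), ((20, 20, 30, 25), 0.8), ((50, 50, 60, 60), 0.7)]`. The second detection overlaps its target with IoU exactly 0.5. As a result, `average_precision(dets, gts, 0.5)` is 1.0, while `average_precision(dets, gts, 0.75)` drops to 51/101.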

Leaf Detection

Please see our competition website for more information on the benchmark and submission process.

Task. In this benchmark, an approach must provide bounding boxes for crop leaves.

Metric. We use the commonly employed average precision (AP), averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05, as proposed by Lin et al. for the COCO benchmark. We also report AP values for IoU thresholds of 0.5 and 0.75.
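
Assuming the benchmark follows the COCO protocol of Lin et al., the reported AP averages interpolated precision over ten IoU thresholds and 101 recall levels. In the formulas below, p(r) denotes the precision at recall r. The single-threshold values AP50 and AP75 are the terms for t = 0.5 and t = 0.75.

```latex
\mathrm{AP} = \frac{1}{|T|} \sum_{t \in T} \mathrm{AP}_t,
\qquad T = \{0.50, 0.55, \dots, 0.95\},
\qquad \mathrm{AP}_t = \frac{1}{101} \sum_{r \in \{0, 0.01, \dots, 1\}} p_{\mathrm{interp}}(r),
\qquad p_{\mathrm{interp}}(r) = \max_{\tilde{r} \ge r} p(\tilde{r})
```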